Google's Parti Generator Relies On 20 Billion Inputs To Create Photorealistic Images

Google's Parti Generator Relies on 20 Billion Inputs to Create Photorealistic Images

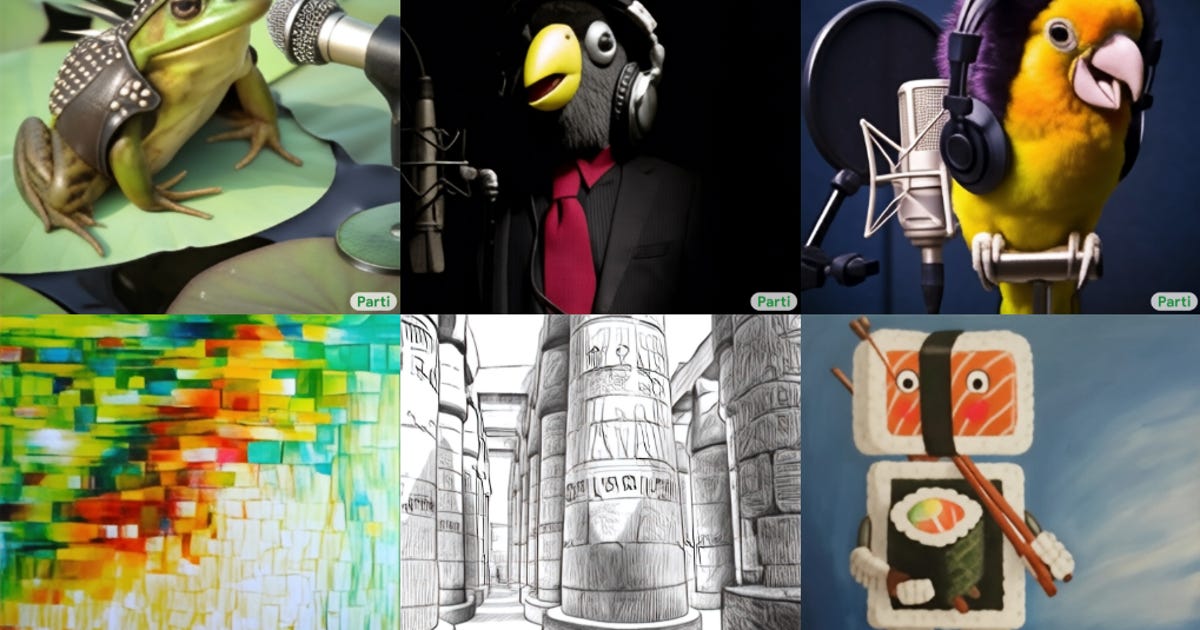

Google unveiled Thursday its Parti text-to-image computer model, which renders hyperrealistic images by studying tens of billions of inputs.

Pathways Autoregressive Text-to-Image, or Parti, studies sets of images, which Google calls "image tokens," using them to construct new images, the search giant said on a research website. Parti's images become more realistic when it has more parameters -- tokens and other training material -- to review. The model studies 20 billion parameters before generating a final image.

Parti differs from Imagen, a text-to-image generator that Google designed to use diffusion learning. The process trains computer models by adding "noise" to an image so that it's obscured, sort of like static on a television screen. The model then learns to decode the static to re-create the original image. As the model improves, it can turn what looks like a series of random dots into an image.

Google isn't releasing Parti or Imagen to the public because AI data sets carry the risk for bias. Because the data sets are created by human beings, they can inadvertently lean into stereotypes or misrepresent certain groups. Google says both Parti and Imagen carry bias toward Western stereotypes.

Google referred to a company blog post when asked to comment on this story.

The search giant has invested heavily in artificial intelligence as a way to improve its services and develop ambient computing, a form of technology so intuitive it becomes part of the background. At its I/O developer conference in May, CEO Sundar Pichai said AI is being used to help Google Translate add languages, create 3D images in Maps and condense documents into quick summaries.

Parti and Imagen aren't the only text-to-image models around. Dall-E, VQ-GAN+CLIP and Latent Diffusion Models are other non-Google text-to-image models that have recently made headlines. Dall-E Mini is an open-source text-to-image AI that's available to the public, but is trained on smaller datasets.

Source